Risk Analysis for Complex Systems with Human Factors Component – A Human-centred Framework

Cathy Hua, Geraint Bermingham (Navigatus Consulting)

Abstract

The consideration and assessment of risk often require analysing complex systems in which the human is an important component and the consequences of failure can be significant. The challenge of modelling and analysing these complex systems derives from the variability of human factors and the complexity of the relationships among system components or sub-systems, and between the system and its environment. Such variability and system complexity challenge many traditional risk management approaches, which are suited only to simpler systems that have a stronger engineered or logical component and limited interactions within and beyond the system boundaries. To conduct risk analysis effectively in complex systems with a human factors component, Navigatus Consulting developed a new human-centred system model and used it in a recent risk management project. This model served as a framework both for guiding risk research design and analyses and for presenting complex relationships at multiple system levels in a concise and focused manner. This article first introduces the rationale for, and development of, the human-centred system model on the basis of a taxonomy of systems, and then demonstrates its application in a recent risk management project. Future applications and extensions of the model are also discussed.

Introduction

The first step in an effective risk management process is to understand the context, which is often that of a known “problem”. Although a description of the “problem” may be available, it may not correctly identify the true issue or source of risk. Many risk management studies involve systems with considerable scale, complexity and/or human factors influence. However, traditional risk management approaches seldom distinguish one type of system from another. This article aims to draw attention to the distinctive characteristics of many complex systems, and to demonstrate a novel risk assessment framework designed for complex systems with strong human factors components.

The following sections first highlight the importance of defining the type of system and the problem. A taxonomy of systems is then introduced to provide conceptual criteria for differentiating types of systems, especially Systems of Systems (SoS), and to explain why these pose a challenge to traditional risk management approaches. Next, a novel risk assessment framework based on a recent project is presented. Potential future applications of this approach are discussed in the conclusion.

The Concept of System of Systems (SoS)

The process of defining a problem within an organisational context is, by its nature, one of modelling a problem-system and its context: identifying the processes and elements relevant to that problem, and drawing interactions and boundaries to locate the core of the problem or its true cause. How a “problem-system” is defined and represented largely reflects the “world view” and the assumptions about the things and mechanisms relating to that problem, and about how they should be considered and analysed. Such a “problem-system” (often represented by a model) guides subsequent information gathering and analysis, as well as the exploration, development and testing of solutions. Analyses built on a “problem-system” that fails to capture the correct boundary, relevant elements and relationships will not lead to a true understanding or solution, no matter how reliable those analyses may be.

A system is an “organised whole in which parts are related together, which generates emergent properties and has some purpose” (Skyttner, 2005, p. 58). However, when scoping a “problem-system”, three distinctive features need to be considered besides its parts and purpose:

- 1) A system may be open to the influence of its environment (physical, political, social, and organisational). Along this line, there are “Open Systems” that interact with and/or are influenced by their environment (e.g., a public road system), and “Closed Systems” that have little or no interaction with their environment (e.g., a turbo engine) (Gershenson & Heylighen, 2005).

- 2) With regard to the relationships among system components, there are “Simple Systems” that may have multiple components, but whose components relate to one another in a linear, largely predictable “action-reaction” fashion, as in the classical examples from mechanical engineering or physics (e.g., an aircraft). By contrast, “Complex Systems” have at least one non-linear relationship between at least one pair of components, and such systems are often Open Systems (Flood & Carson, 1993).

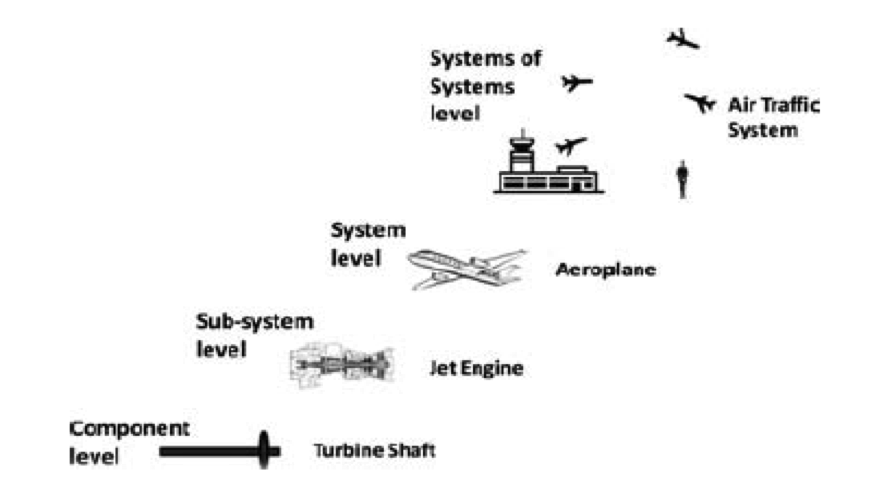

- 3) A system may be functionally independent by itself, or a complex “System of Systems (SoS)” that consists of many disperse and independent sub-systems. In an SoS, the sub-systems are usually developed together evolutionarily and form the “the whole is greater than the sum of its parts” effects in resourcing, performance, and emergent features such as system reliability. Furthermore, there are simpler types of SoS in which the sub-systems are developed together to achieve the same operation goal. This may include complex mechanical engineering systems such as nuclear plants, modern aircraft and spacecraft. This counter-intuitive “simplicity” comes from the fact that the inter-dependencies are designed in and understood. To the contrary, there is also more complex SoS in which each subsystem is not only independently functional but also has its own culture, value, process and goals (Dogan et al., 2011). Compared with other types of systems, this more complex SoS is in fact more frequently encountered and given the human and societal context, effectively omnipresent. It could be any area of activity such as an industry or sub-sector or endeavour with its multiple stakeholders, internal and external markets, economic and political environment. However, it is also this more complex type of SoS that poses challenge to many existing system models and tools used in risk management as discussed in the next section. Figure 1 below provides a simplified illustration of an airport as a complex SoS with different levels of sub-systems. Note that for simplicity, the external environment and broader social and political system is not included in this illustration.

Figure 1.Simplified illustration of an airport as a System of Systems (SoS) with multiple levels

(Source: Dogan et al., 2011)
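
The three features above can be read as a short decision procedure. The Python sketch below is illustrative only and is not part of the Navigatus method: `SystemProfile`, `classify` and `assumption_gaps` are hypothetical names, and reducing each feature to a yes/no flag is a deliberate simplification of what is, in practice, a judgement. It also lists the aspects that tools built on closed and/or simple-system assumptions are likely to miss, as discussed in the next section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical description of a problem-system along the three features."""
    name: str
    open_to_environment: bool         # feature 1: open vs closed
    has_nonlinear_relationship: bool  # feature 2: complex vs simple
    independent_subsystems: bool      # feature 3: made of independently functional sub-systems
    subsystems_own_goals: bool        # do sub-systems have their own culture, values, processes, goals?


def classify(p: SystemProfile) -> list[str]:
    """Return taxonomy labels for a system profile (illustrative only)."""
    labels = ["open" if p.open_to_environment else "closed",
              "complex" if p.has_nonlinear_relationship else "simple"]
    if p.independent_subsystems:
        # Designed-in, well-understood inter-dependencies give a simpler SoS;
        # sub-systems with their own goals give the more complex SoS.
        labels.append("complex SoS" if p.subsystems_own_goals else "simpler SoS")
    return labels


def assumption_gaps(p: SystemProfile) -> list[str]:
    """List aspects that tools built on closed/simple-system assumptions may miss."""
    gaps = []
    if p.open_to_environment:
        gaps.append("interactions with the environment")
    if p.has_nonlinear_relationship:
        gaps.append("non-linear relationships")
    if p.independent_subsystems and p.subsystems_own_goals:
        gaps.append("emergent SoS properties")
    return gaps


turbo_engine = SystemProfile("turbo engine", False, False, False, False)
part_135 = SystemProfile("Part 135 sector", True, True, True, True)

print(classify(turbo_engine), assumption_gaps(turbo_engine))  # prints ['closed', 'simple'] []
print(classify(part_135))  # prints ['open', 'complex', 'complex SoS']
```

A real scoping exercise would record the evidence behind each judgement rather than a bare boolean; the flags only make the logic of the taxonomy explicit.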

Why some traditional risk analysis approaches may miss the point

With these classifications of systems in mind, it is valuable to consider what they mean for the management of risk in a complex SoS.

Suppose you were asked to “identify and profile the key safety risks in the small commercial aircraft and helicopter air transport sector”. How should the problem be defined?

Instead of first seeking to apply only the methodologies listed in documents such as AS/NZS HB 436:2013 or AS/NZS HB 89:2013 (Standards Australia International & Standards New Zealand), or indeed in most risk management handbooks, the nature of the system itself must first be examined. Checking against the system classifications above, it becomes evident that the small commercial aircraft sector is a complex SoS. It comprises a number of operators (companies) facing diverse business resourcing (funding, staffing, etc.), operational environments (location, routes, geographical and meteorological conditions), activity types (passenger transport, air ambulance, etc.), operational systems (air traffic control, pilot scheduling, management structures, etc.) and a range of engineered systems (aircraft type, communication systems, etc.). There are also the shared broader social and political environments, as well as various stakeholders (tourists, hospitals, regulators, and regional and central government agencies). All these elements interact in different ways at different levels to create, essentially, a “mega-system”. What exactly should be included in the information collection and analysis stages of a project to understand the risks? How should this large number of variations in system components and relationships be handled?

There are a number of ways to scope and describe such systems, but not all are helpful in setting the focus of analysis. An overly complex model that precisely captures all the elements and unique relationships of a complex SoS tends to overwhelm human information processing, and is thus of limited value and hinders the analysis process. Applying a series of traditional risk analysis approaches, such as bow-tie analysis, risk matrices and fault-tree analysis, to sub-system components could capture some aspects of the SoS. However, such tools are often developed on the assumption of closed and/or simple systems, and will thus miss both the non-linear relationships in open systems and the emergent properties of the overarching SoS that cannot be found in any component sub-system. One example of such an emergent property is the safety culture of individual operators, which together form the overall sector safety culture; another is a systematic flow of mid-career pilots from the sector into airlines, resulting in a sector-wide experience gap; a third is the influence operators have on each other through market competition, benchmarking, and the presence or absence of collaboration that brings synergy and more efficient learning and resourcing.

To capture such a complex SoS efficiently while maintaining the focus and conciseness needed to guide analysis, Navigatus Consulting developed a different approach and applied it to both the small aircraft and the commercial maritime sectors. The example described in the next section is for the small aircraft and helicopter air transport sector, which is defined under the Civil Aviation (CA) regulatory model as the “Part 135 sector”.

A human-centred model for complex SoS

To model a complex SoS effectively and so guide research and analysis, the first step taken by Navigatus was to identify the core component that is directly related, and most critical, to the sector’s safety risks. Once this core factor was identified, the other components and sub-systems, and the multi-level relationships among them, were assessed in terms of how they affect it.

In the small aircraft and helicopter air transport sector project, the next step was to identify and profile the key safety risks; this was initially done on the basis of both the aviation safety literature (e.g., Salas, 2010) and Navigatus’ deep understanding of aviation safety. The core factor for safety, viewed from both the sub-system level (e.g., a single aircraft, a single operation, a single company) and the SoS level (the overall Part 135 sector), was identified as the ‘single pilot’. That is, in this sector there is invariably a single pilot making decisions in real time or near real time as s/he interacts with the environment and the aircraft control system. In such a sub-system the pilot, as the direct human factor, is both the key decision-making entity and the internal component that is less predictable and more variable than the engineered elements. Thus, the elements of this SoS that influence the pilot’s decision-making and individual state, and hence stable and reliable performance, must be the key focus.

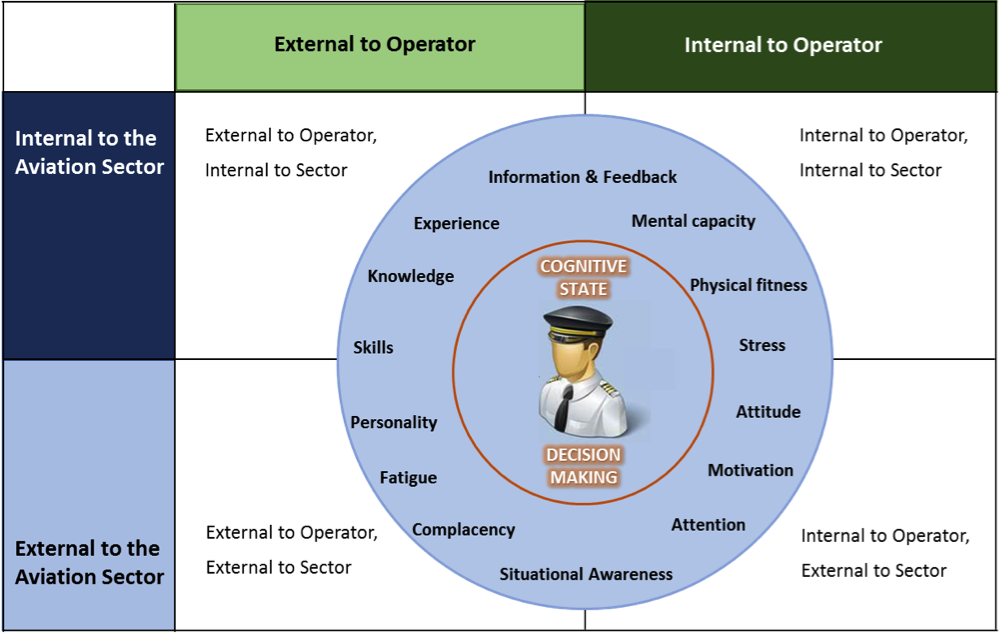

Based on the rationale above, the human-centred model shown in Figure 2 below was initially developed. This working model was established to serve as the framework for research and analysis, and was thus intentionally designed to be conceptually concise. The model centres on the pilot’s decision-making and performance capability, around which influencing human factors are identified at multiple levels. The first level is within the person: cognitive state and decision-making capacity, which relate to multiple cognitive, psychological and psycho-physical sub-systems such as knowledge, experience, stress, attitude, motivation, and information and feedback. Multiple sources external to the person that influence these sub-systems were then hypothesised, such as pilot scheduling (which could influence pilot stress and fatigue), company safety culture (which could, for example, influence pilot attitudes), safety procedures and rules, and management decisions. Information and feedback is another example: it could reach pilots in the immediate real-time (seconds, minutes), medium-term (hours/days) or long-term (weeks, months, years), from the operational system or from other people through training or supervision, but it also includes mechanisms that allow pilots to provide feedback about issues, concerns, questions and their performance. Therefore, the influences on a particular item in the pilot-centred core circle could come from sources at multiple levels, across different sub-systems and over a range of time frames.
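
One way to see the structure of this working model is as a small directed graph: external sources point to within-person sub-systems, which in turn point to the core factor. The Python sketch below encodes only the links named in the paragraph above. The dictionary names, the `sources_of` and `paths_to_core` helpers, and the one-hour and seven-day cut-offs used to bucket feedback delays are assumptions made for illustration, not part of the published model.

```python
# Hypothetical encoding of the working model; all names here are illustrative.
CORE = "pilot decision-making and performance capability"

# Within-person sub-systems named in the text (first level of the model).
INTERNAL = {"knowledge", "experience", "stress", "fatigue", "attitude",
            "motivation", "information and feedback"}

# External sources -> internal sub-systems they are hypothesised to influence.
# Only links stated in the text are filled in; the last two sources are left
# unlinked because the text does not say which sub-systems they act on.
EXTERNAL = {
    "pilot scheduling": {"stress", "fatigue"},
    "company safety culture": {"attitude"},
    "operational system": {"information and feedback"},
    "training and supervision": {"information and feedback"},
    "safety procedures and rules": set(),
    "management decisions": set(),
}


def sources_of(item: str) -> list[str]:
    """External sources linked to one within-person sub-system, sorted by name."""
    return sorted(src for src, targets in EXTERNAL.items() if item in targets)


def paths_to_core(source: str) -> list[tuple[str, str, str]]:
    """Multi-level chains: external source -> internal sub-system -> core factor."""
    return [(source, item, CORE) for item in sorted(EXTERNAL[source])]


def feedback_horizon(delay_seconds: float) -> str:
    """Bucket a feedback delay into the three time frames described in the text.

    The cut-offs (1 hour, 7 days) are assumptions, not taken from the source.
    """
    if delay_seconds < 3600:
        return "immediate"    # seconds, minutes
    if delay_seconds < 7 * 24 * 3600:
        return "medium-term"  # hours / days
    return "long-term"        # weeks, months, years


print(sources_of("information and feedback"))
print(paths_to_core("pilot scheduling"))
print(feedback_horizon(2 * 24 * 3600))
```

Keeping the links as explicit data makes it easy to ask the question the model is built around: which sources, at which level, ultimately reach the core factor.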

Once the sources of influence on the core factor were identified, they were classified along two dimensions. First, it makes practical sense to view the “sources of influence” as being within or outside the operator (company), as operators are the immediate higher-level systems that provide the pilots with a unique operational environment and resources. This dimension helps distinguish which influences are within an operator’s control. Second, the aviation industry sector was considered, both to acknowledge the emergent qualities across operators and the interactions between sector-wide components, and to distinguish influences within the sector’s control from macro-level factors beyond its control or influence. Along these two dimensions, the sub-systems of this Rule Part 135 SoS are classified into the four groups shown in Figure 2.

Figure 2. A Human-centred Model for the Aviator (Framework)

(Source: Navigatus, 2015)

This human-centred model served as the framework for guiding the research design, information and data collection, and analysis. Through workshops, interviews, site visits, operator and pilot surveys, and incident data analysis, the model was further refined by adding system components under each broad category described above, to highlight key relationships among components identified as critical to the sector’s safety risks. For example, organisational safety culture, training provided at sector level by training organisations, supervision provided on the job within the organisation, and pilot outflow to large airlines were among the key risk factors found. The source of each such risk factor can then be located in the model, in or across the corresponding quadrant(s).

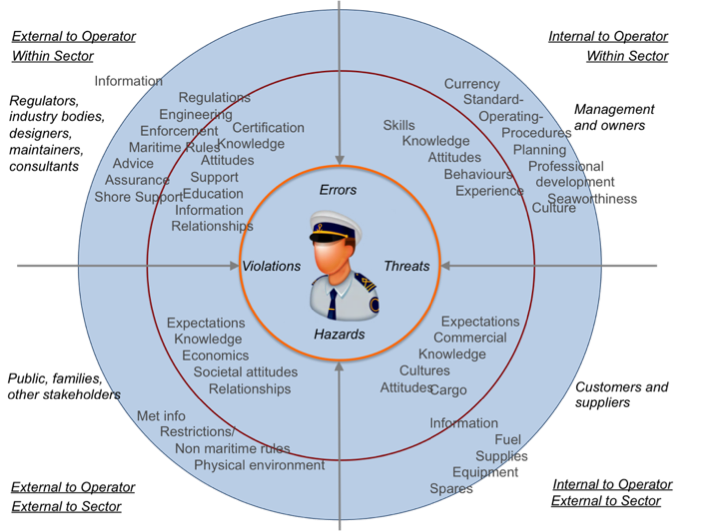

Figure 3. Modified Human-centred Model for the Mariner

(Source: Navigatus, 2015)

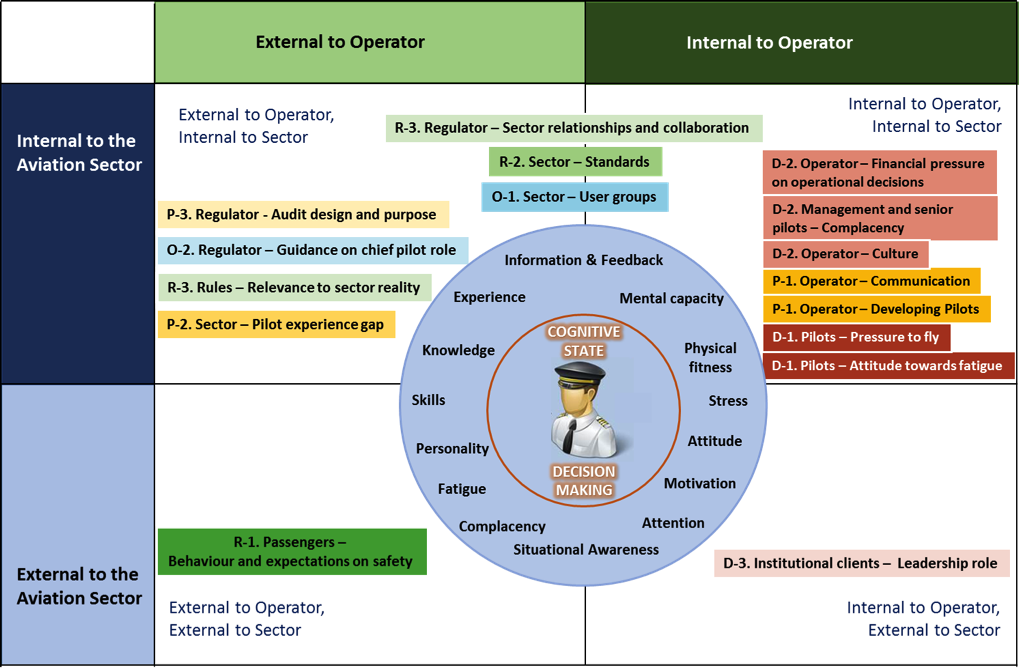

The model was similarly developed for a subsequent application in the maritime sector (Figure 3). The context is both different and similar: in this case the central agent is the vessel master, and the variables are possibly strongly influenced by interaction with the vessel’s bridge team. The human-centred model also guided the arrangement of the key risk factors into risk statements according to 1) the context through which they shape safety, and 2) the strength (immediacy) of their influence on the core safety factor (the pilot / master). Together, these two dimensions form a 3 x 4 matrix comprising the following categories (Navigatus, 2015):

Safety-shaping Contexts:

- Operational context – this includes factors within the immediate operational context that influence the pilot’s / master’s capacity for operations and decision-making (e.g., pressure to fly, communication, and pilots’ skills and experience).

- Operators/Organisations – this category refers to factors that exist within a particular operator or within the relevant sector and could influence the immediate operational context mentioned above (e.g., organisational culture, organisational decisions, sector-wide pilot / master experience and supply, and the sector’s adoption of standards).

- External to operators – this category includes factors from outside the operator or the sector that influence the organisational and sector safety-shaping context (e.g., rules, the regulator-sector relationship, and institutional clients / customers).

Immediacy of influence:

- Direct (D) – factors that have direct influence on the specified safety-shaping context.

- Proximal-indirect (P) – factors that have relatively indirect and often non-immediate influence on the specified safety-shaping context.

- Distal-indirect (R) – factors whose influence on a specified safety-shaping context is further removed than that of proximal-indirect factors.

- Safety improvement opportunity (O) – factors that were not raised as risks, but are considered potential levers that could support improvement in safety performance.
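
The 3 x 4 matrix can be sketched as a simple grid keyed by safety-shaping context and immediacy of influence. In the Python sketch below, the class and function names are hypothetical. The factor names are examples taken from the text, but the immediacy codes assigned to them are invented purely to show the structure and do not reproduce the classifications in the original report.

```python
from dataclasses import dataclass
from enum import Enum


class Context(Enum):
    """Safety-shaping contexts (rows of the matrix)."""
    OPERATIONAL = "Operational context"
    ORGANISATIONAL = "Operators/Organisations"
    EXTERNAL = "External to operators"


class Immediacy(Enum):
    """Immediacy of influence (columns), using the letter codes from the text."""
    DIRECT = "D"
    PROXIMAL_INDIRECT = "P"
    DISTAL_INDIRECT = "R"
    OPPORTUNITY = "O"


@dataclass(frozen=True)
class RiskFactor:
    name: str
    context: Context
    immediacy: Immediacy

    def label(self) -> str:
        # Prefix the item with its letter code (assumed display format).
        return f"{self.immediacy.value} {self.name}"


def build_matrix(factors: list[RiskFactor]) -> dict[tuple[Context, Immediacy], list[str]]:
    """Place each factor in one of the 3 x 4 (context, immediacy) cells."""
    grid = {(c, i): [] for c in Context for i in Immediacy}  # all 12 cells, even if empty
    for f in factors:
        grid[(f.context, f.immediacy)].append(f.name)
    return grid


# Factor names come from the text; the immediacy codes are HYPOTHETICAL.
factors = [
    RiskFactor("pressure to fly", Context.OPERATIONAL, Immediacy.DIRECT),
    RiskFactor("organisational culture", Context.ORGANISATIONAL, Immediacy.PROXIMAL_INDIRECT),
    RiskFactor("regulator-sector relationship", Context.EXTERNAL, Immediacy.DISTAL_INDIRECT),
    RiskFactor("sector's adoption of standards", Context.ORGANISATIONAL, Immediacy.OPPORTUNITY),
]

matrix = build_matrix(factors)
for f in factors:
    print(f.context.value, "|", f.label())
```

Keeping all twelve cells in the grid, even when empty, makes gaps visible, for example a context in which no improvement opportunities (O) have yet been identified.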

Figure 4 below shows the revised aviation model, with sources of influence identified and classified according to the dimensions described above. The letters D, P, R or O before each coloured item in the model correspond to the “Immediacy of influence” classifications described above. The full model and more detailed risk statements can be found in the original report, available on the CAA website:

http://www.caa.govt.nz/assets/legacy/Safety_Reports/srp_part_135.pdf

Figure 4. Final Human-centred Model for Part 135 Sector Risk as research output

(Source: Navigatus, 2015)

A similar figure, as applied to the New Zealand commercial maritime sector, is referenced at:

https://www.safety4sea.com/nz-coastal-navigation-safety-review/

Conclusion

This article describes an approach to understanding the risks of a system-wide problem in a complex SoS that involves extensive sub-system variation and strong human factors components. The complexity of the SoS, its emergent properties that cannot be found in any sub-system, and its large number of non-linear relationships often challenge traditional risk management approaches, which are more appropriate for closed, simple systems. Identifying the core of the problem-system and its human factors allowed a focused yet multi-level perspective that restructured the understanding of the components and relationships in this complex SoS. This in turn allowed factors that could not be found at the sub-system level, such as the leadership role of institutional clients, the sector-wide pilot experience gap, and the potential use of a sector user group, to be fully considered.

System-modelling approaches and frameworks similar to the one described in the previous section could be widely applied to guide risk management in other complex SoS with a strong human factors component, such as maritime, finance, investment, health care and land transport systems. Depending on the problem-solving objective and the features of the SoS, the core factor need not be human, but human factors should always be considered, given their natural fallibility and the key role of human beings in many SoS. In some cases where multiple people are involved, it is more effective to view the humans within the SoS through core processes, such as the logistics and resourcing components of a complex production chain comprising many suppliers, manufacturers and sellers.

The key learning from this work is the value of understanding the features of the system while remaining focused on, and relevant to, the objective of problem solving. This idea is presented as part of our drive to add value in an ever-evolving world: to develop methodologies that overcome the limitations of existing approaches, and to learn continuously from other disciplines such as systems engineering and ergonomics.

References

Dogan, H., Pilfold, S. A., & Henshaw, M. (2011). Ergonomics for systems of systems: The challenge of the 21st century. In M. Anderson (Ed.), Contemporary ergonomics and human factors 2011 (pp. 485–492). Boca Raton: CRC Press/Taylor & Francis. Retrieved from http://www.crcnetbase.com/isbn/9780203809303

Flood, R., & Carson, E. (1993). Dealing with complexity: An introduction to the theory and application of systems science (2nd ed.). New York: Plenum Press.

Gershenson, C., & Heylighen, F. (2005). How can we think complex? In K. A. Richardson (Ed.), Managing organizational complexity: Philosophy, theory, application (pp. 47–62). Greenwich, CT: Information Age Publishing.

Navigatus Consulting Ltd. (2015). Part 135 Sector Risk Profile 2015. Retrieved from http://www.caa.govt.nz/assets/legacy/Safety_Reports/srp_part_135.pdf

Salas, E. (Ed.). (2010). Human factors in aviation. Burlington, MA: Academic Press.

Skyttner, L. (2005). General systems theory: Problems, perspectives, practice (2nd ed.). Singapore: World Scientific.

Standards Australia International, & Standards New Zealand. (2013). Risk management: Guidelines on risk assessment techniques (AS/NZS HB 89:2013). Sydney/Wellington. Retrieved from https://shop.standards.govt.nz/catalog/89:2013(SA%7CSNZ%20HB)/scope

Standards Australia International, & Standards New Zealand. (2013). Risk management guidelines: Companion to AS/NZS ISO 31000:2009 (AS/NZS HB 436:2013). Sydney/Wellington. Retrieved from https://shop.standards.govt.nz/catalog/436%3A2013%28SA%7CSNZ+HB%29/view
